Boost your career with Data, AI, Machine Learning, Data Engineering and Cloud Computing

Whether you are an employee, a career changer, an entrepreneur or a student, LePont offers training courses in Data and Artificial Intelligence to give a new dimension to your career.

Over 400 courses: which one is right for you?

Whatever your background, give your career a new boost or change your life with our Data and Artificial Intelligence training courses.

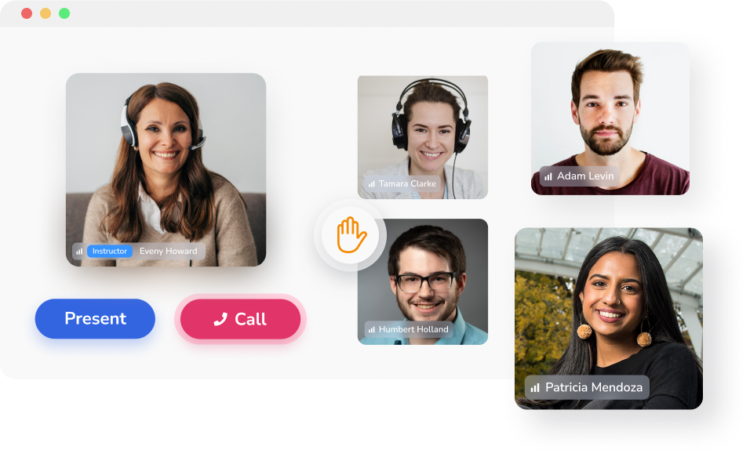

A breakthrough approach, focused on the practical acquisition of technical and behavioral skills

- Inductive pedagogy in which the learner is an integral part of the training program
- An approach focused on measuring the business impact of the training
- Optimal use of EdTech and neuroscience

Unleash the potential of companies and employees

While there is no magic formula for solving the digital skills shortage that companies face today, training is clearly a central part of the solution.